LEAP MOTION TECHNOLOGY

The way we interact with our computers is outdated. We still slide a pointing device across a flat surface, or an index finger across a touchscreen. We speak to our computers through 2D motion and buttons (left, right, up, down; left click, right click) even though we live in a 3D world. But our interaction with computers is about to move up a notch with the introduction of Leap Motion, a small device that lets you control your computer by gesturing with your hands and fingers in mid-air. It's quite awesome, and we are only now beginning to tap into the potential uses of this technology.

Leap Motion works by capturing any motion in its workspace (the ether above it) and translating it into input for the computer. It does this through an array of camera sensors that monitor a 1-cubic-foot workspace. It is also extremely accurate (to 0.01 mm), and it distinguishes between all 10 of your fingers and tracks each one individually. This is quite a drastic change from one hand stuck on a mouse, or the characteristic two-finger pinch-to-zoom on newer trackpads and smartphones. With 10 fingers moving in the workspace, you can communicate with a computer in many more ways. And Leap Motion can track not only fingers but also the pen you are holding, or even a pair of chopsticks! In the demonstration video, you can see someone maneuvering a pair of chopsticks to virtually grab an Angry Bird and pull it back on a slingshot. This might sound a bit silly, but watch the video and your mind will be blown.

But wait, there's more good news. For one, to use Leap Motion, you only have to plug it into your computer's USB port and install a piece of software. That's right! And it will cost only $70 when it comes out later this year. What's more, there are indications that the technology will be integrated into laptops and other devices in the future.

But Leap Motion has the potential to be much more than the next iteration of the mouse. Take a quick walk around the official forum and you'll be exposed to a myriad of great ideas, some incredibly innovative and others just plain fun. Here's a rundown:

1. 3D scanning. Since Leap Motion can essentially track and map anything in its workspace with great accuracy, it could be adapted to scan an object in 3D. Connect a 3D printer to the Leap Motion device and you would have a full-blown replicator!

2. Interaction with virtual 3D models. This is an obvious use of Leap Motion. Architects and engineers could explore a 3D model on their computers by virtually rotating it, zooming in and out, and so on. Surgeons may also find this useful, since they could explore scans and other records without removing their sterile gloves.

3. Sign language. As an accessibility tool for deaf people, Leap Motion may allow for a more intuitive experience.

4. Gaming. Angry Birds and chopsticks are just the beginning.

5. TV remote replacement. The couch will be our home.

6. Directing a virtual orchestra. This can be fun but can also be the perfect tool for conductors.

7. Dental opportunities. You could stick a smaller version of the Leap Motion device in your mouth and spin it around a little to get a full model of your teeth. This is a subset of 3D scanning, but I could not not mention such dentist fun.

It is clear that Leap Motion could have tremendous implications for science. After all, scientists are already putting 3D scanning, 3D printing and interaction with virtual 3D models to use. For instance, Dr. Lacovara of Drexel University in Philadelphia announced earlier this year plans to scan the university's fossil collection and print smaller-sized replicas. Leap Motion could greatly accelerate the process.

As Leap Motion opens its platform to developers, coders and other enthusiasts, the technology appears to transcend the boundaries of geekiness and tech gimmickry. This one definitely has the potential to be revolutionary.

So, are we on track for a Minority Report future? Only time will tell. In the meantime, I'm going to do some pushups... don't want to get sore muscles, do I?

From the earliest hardware prototypes to the latest tracking software, the Leap Motion platform has come a long way. We’ve gotten lots of questions about how our technology works, so today we’re taking a look at how raw sensor data is translated into useful information that developers can use in their applications.

Hardware

From a hardware perspective, the Leap Motion Controller is actually quite simple. The heart of the device consists of two cameras and three infrared LEDs. The cameras track infrared light with a wavelength of 850 nanometers, which is outside the visible light spectrum.

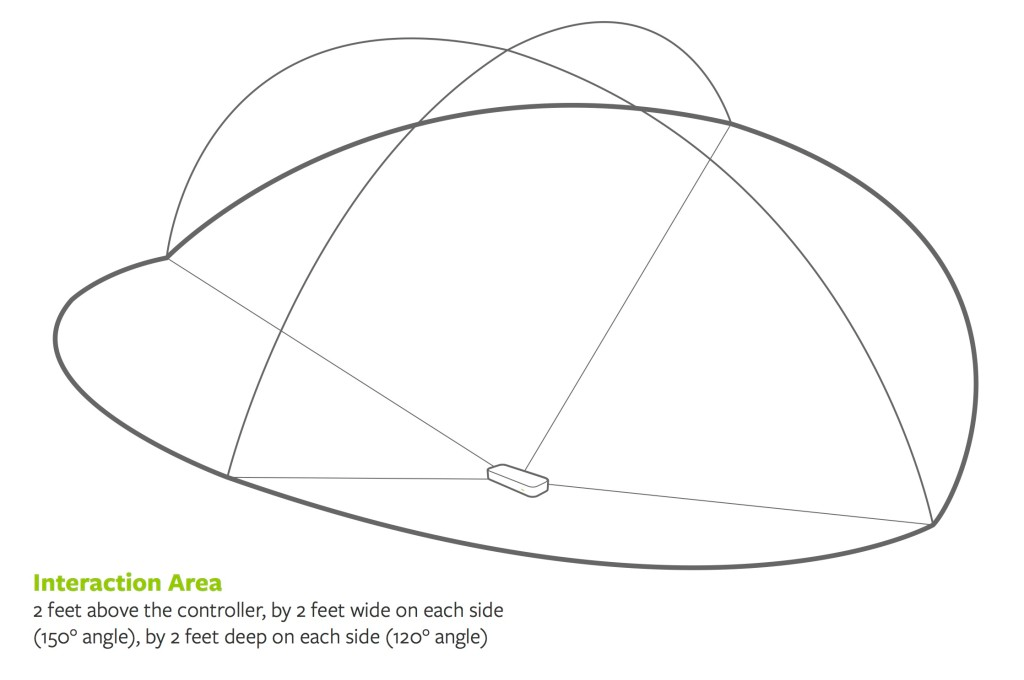

Thanks to its wide-angle lenses, the device has a large interaction space of eight cubic feet, which takes the shape of an inverted pyramid: the intersection of the binocular cameras' fields of view. The Leap Motion Controller's viewing range is limited to roughly 2 feet (60 cm) above the device. This limit comes from how LED light propagates through space: beyond a certain distance, it becomes much harder to infer your hand's position in 3D. LED light intensity, in turn, is ultimately limited by the maximum current that can be drawn over the USB connection.
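
To make the shape of that interaction space concrete, here is a minimal Python sketch that tests whether a point lies inside an inverted pyramid with its apex at the device. The 60 cm height comes from the text above; the 60° half-angles and the coordinate convention (millimetres, with y pointing straight up from the device) are assumptions chosen for illustration, not published specifications.

```python
import math

# Hypothetical parameters for illustration only. The ~60 cm height limit is
# from the text; the lens half-angles are assumed.
MAX_HEIGHT_MM = 600.0
HALF_ANGLE_X_DEG = 60.0  # assumed half-angle of the field of view along x
HALF_ANGLE_Z_DEG = 60.0  # assumed half-angle of the field of view along z


def in_interaction_zone(x: float, y: float, z: float,
                        max_height: float = MAX_HEIGHT_MM,
                        half_x_deg: float = HALF_ANGLE_X_DEG,
                        half_z_deg: float = HALF_ANGLE_Z_DEG) -> bool:
    """Return True if (x, y, z) in mm lies inside an inverted pyramid whose
    apex sits at the device origin and whose axis points up along +y."""
    if not (0.0 < y <= max_height):
        return False
    # The pyramid's cross-section widens linearly with height above the apex.
    half_width = y * math.tan(math.radians(half_x_deg))
    half_depth = y * math.tan(math.radians(half_z_deg))
    return abs(x) <= half_width and abs(z) <= half_depth


def pyramid_volume(max_height: float, half_x_deg: float,
                   half_z_deg: float) -> float:
    """Volume of the pyramid: (1/3) * base area * height."""
    base_w = 2 * max_height * math.tan(math.radians(half_x_deg))
    base_d = 2 * max_height * math.tan(math.radians(half_z_deg))
    return base_w * base_d * max_height / 3.0
```

With these assumed angles, a point 20 cm straight above the device is inside the zone, while a point 70 cm up is not. Because the base grows with the tangent of each half-angle, the volume is very sensitive to the lens angles.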

The device's USB controller then reads the sensor data into its own local memory and performs any necessary resolution adjustments. The resulting data is streamed via USB to the Leap Motion tracking software.
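
The text doesn't say what "resolution adjustments" the USB controller performs. One common way to reduce image resolution is pixel binning, sketched below in Python as a generic illustration; the function name and the 2x2 block size are hypothetical, and this is not a description of the actual firmware.

```python
from typing import List

Frame = List[List[int]]  # rows of 8-bit grayscale pixel values


def bin_2x2(frame: Frame) -> Frame:
    """Halve the resolution by averaging each 2x2 block of pixels.
    Assumes the frame has an even number of rows and columns."""
    rows, cols = len(frame), len(frame[0])
    if rows % 2 or cols % 2:
        raise ValueError("frame dimensions must be even")
    out: Frame = []
    for r in range(0, rows, 2):
        out_row = []
        for c in range(0, cols, 2):
            total = (frame[r][c] + frame[r][c + 1]
                     + frame[r + 1][c] + frame[r + 1][c + 1])
            out_row.append(total // 4)  # integer average stays within 0..255
        out.append(out_row)
    return out
```

Binning trades spatial detail for less data per frame, which matters when everything has to fit through a USB link.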

Because the Leap Motion Controller tracks in near-infrared, the images appear in grayscale. Intense sources or reflectors of infrared light can make hands and fingers hard to distinguish and track. This is something that we’ve significantly improved with our v2 tracking beta, and it’s an ongoing process.
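
As a rough illustration of why intense infrared sources cause trouble, the sketch below measures what fraction of an 8-bit grayscale frame is saturated (at or near full brightness); saturated pixels carry no detail, so a hand over such a region is hard to separate from its surroundings. The threshold of 250 and the function name are assumptions for illustration only; the text does not describe how the tracking software detects this condition.

```python
from typing import List


def saturated_fraction(frame: List[List[int]], threshold: int = 250) -> float:
    """Fraction of pixels at or above `threshold` in an 8-bit grayscale frame.
    A high value hints that a strong infrared source or reflector is washing
    out part of the image."""
    total = sum(len(row) for row in frame)
    if total == 0:
        return 0.0
    bright = sum(1 for row in frame for px in row if px >= threshold)
    return bright / total
```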

Software

Once the image data is streamed to your computer, it’s time for some heavy mathematical lifting. Despite popular misconceptions, the Leap Motion Controller doesn’t generate a depth map – instead it applies advanced algorithms to the raw sensor data.
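
The text does not disclose which algorithms are used. As a generic illustration of how two cameras can locate a point in 3D without computing a dense depth map, the sketch below triangulates a single feature matched in both images of a rectified stereo pair, using the standard pinhole relation Z = f * B / d (focal length times baseline, divided by disparity). The function name and the focal length, baseline and principal point values are hypothetical, and this should not be read as Leap Motion's actual method.

```python
from typing import Tuple


def triangulate(x_left: float, x_right: float, y: float,
                focal_px: float, baseline_mm: float,
                cx: float, cy: float) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) in mm, in the left camera's frame, for one feature
    matched in a rectified stereo pair (same image row in both cameras)."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("feature must have positive disparity")
    z = focal_px * baseline_mm / disparity
    # Back-project the pixel offset from the principal point at depth z.
    x = (x_left - cx) * z / focal_px
    y_mm = (y - cy) * z / focal_px
    return x, y_mm, z
```

For example, with a hypothetical 400-pixel focal length and 40 mm baseline, a feature at x = 340 in the left image and x = 300 in the right (a disparity of 40 px) lies 400 mm from the cameras.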

The Leap Motion Service is the software on your computer that processes the images. After compensating for background objects (such as heads) and ambient environmental lighting, the images are analyzed to reconstruct a 3D representation of what the device sees.
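
One simple, generic way to compensate for a static background and a uniform shift in ambient lighting is background subtraction with an offset correction. The Python sketch below assumes a stored background frame and uses the median pixel difference as the ambient offset; the function name, the median choice and the threshold of 30 are assumptions, not details of the Leap Motion Service.

```python
import statistics
from typing import List

Frame = List[List[int]]  # rows of 8-bit grayscale pixel values


def foreground_mask(frame: Frame, background: Frame,
                    min_diff: int = 30) -> List[List[bool]]:
    """Return True for pixels that differ from the stored background by more
    than `min_diff` after removing a global brightness offset."""
    diffs = [px - bg
             for row, bg_row in zip(frame, background)
             for px, bg in zip(row, bg_row)]
    # The median difference approximates a uniform change in ambient light
    # and is not skewed much by a small bright foreground object.
    offset = statistics.median(diffs)
    return [[abs(px - bg - offset) > min_diff
             for px, bg in zip(row, bg_row)]
            for row, bg_row in zip(frame, background)]
```

Using the median rather than the mean keeps a bright foreground object, such as a hand close to the LEDs, from inflating the estimated ambient offset.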

Next, the tracking layer matches the data to extract tracking information such as fingers and tools. Our tracking algorithms interpret the 3D data and infer the positions of occluded objects. Filtering techniques are applied to ensure smooth temporal coherence of the data. The Leap Motion Service then feeds the results, expressed as a series of frames (snapshots containing all of the tracking data), into a transport protocol.
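
The text doesn't name the filtering techniques used. A common, simple choice for smoothing noisy positions over time is an exponential moving average, sketched below in Python; the class name and the default alpha of 0.5 are illustrative assumptions.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class ExponentialSmoother:
    """Exponential moving average over successive 3D positions.

    alpha in (0, 1]: higher values follow new samples more closely,
    lower values suppress more jitter at the cost of added lag.
    """

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Vec3] = None

    def update(self, sample: Vec3) -> Vec3:
        if self.state is None:
            # The first sample passes through unchanged.
            self.state = sample
        else:
            a = self.alpha
            sx, sy, sz = sample
            px, py, pz = self.state
            self.state = (a * sx + (1 - a) * px,
                          a * sy + (1 - a) * py,
                          a * sz + (1 - a) * pz)
        return self.state
```

Each call blends the new fingertip position with the previous smoothed one, trading a little responsiveness for stability.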

Through this protocol, the service communicates with the Leap Motion Control Panel, as well as native and web client libraries, through a local socket connection (TCP for native, WebSocket for web). The client library organizes the data into an object-oriented API structure, manages frame history, and provides helper functions and classes.
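
To illustrate what managing frame history can mean, here is a minimal Python sketch of a client-side buffer that keeps the most recent frames and lets application code ask for the current frame or an earlier one by offset. `Frame`, `FrameHistory`, their fields and the default capacity of 60 are hypothetical names and values for this example, not the actual client library API.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple


@dataclass
class Frame:
    """Hypothetical snapshot of tracking data for one instant."""
    frame_id: int
    timestamp_us: int
    fingertips: List[Tuple[float, float, float]] = field(default_factory=list)


class FrameHistory:
    """Keeps the most recent `capacity` frames; frame(0) is the newest,
    frame(1) the one before it, and so on."""

    def __init__(self, capacity: int = 60) -> None:
        self._frames: Deque[Frame] = deque(maxlen=capacity)

    def __len__(self) -> int:
        return len(self._frames)

    def push(self, frame: Frame) -> None:
        # When the buffer is full, deque drops the oldest frame automatically.
        self._frames.append(frame)

    def frame(self, history: int = 0) -> Frame:
        if not 0 <= history < len(self._frames):
            raise IndexError("requested frame is outside the stored history")
        return self._frames[-1 - history]
```

A bounded deque keeps memory constant however long the application runs, while still letting gesture code compare the current frame with recent ones.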

From there, the application logic ties into the Leap Motion input, allowing a motion-controlled interactive experience. Next week, we’ll take a closer look at our SDK and getting started with our API.